So you installed the Jenkins Helm chart on a Kubernetes cluster. Now you want to build Docker images. How would you do that? There are several ways. Today, we’ll focus on creating and using a Jenkins Docker in Docker agent for that purpose.

If you later find this article useful take a look at the disclaimer for information on how to thank me.

Introduction

If all you need is Docker in Docker Jenkins agent images, jump straight to the demo. Otherwise, if you want to understand the context better, keep reading.

First, let’s clarify what a Docker in Docker agent is in the context of Jenkins builds. In short, CI/CD systems like Jenkins build Docker images, push them to a registry and often run them for testing purposes.

Jenkins living and building Docker images in middle ages

You’ve probably seen Jenkins doing that for you. I’ve seen it too and it was bad. Why? Because Jenkins and its agents were configured and run manually on VMs as Java processes. Maintaining such a system became increasingly frustrating, because Jenkins plugins and agent tools like Docker and Maven were installed manually. Eventually, no one really maintained the system: the plugins were not updated and no improvements were introduced over time. If such a Jenkins serves critical business purposes, this state becomes permanent. Everybody is afraid to touch anything, so everybody does nothing. And no one really knows when this Jenkins will fall into the abyss.

Jenkins building Docker images in a modern world

You may ask how to run Jenkins and its agents in the modern world. The answer is to leverage what Kubernetes and Docker offer. Thanks to these tools, Jenkins and its agents run as Kubernetes pods. Moreover, agents are dynamically provisioned for each build. So, at any given moment, the Jenkins controller is the only long-running web application. This saves a lot of resources and doesn’t require constantly running VMs and/or physical machines for long-running agents. It may sound too good to be true. Indeed, to enjoy these benefits, one has to build images of the controller and the agents.

Docker in Docker Jenkins agent

Today, we focus on the agent that allows building Docker images. Wait, will we need an image with a Docker daemon installed inside? That may sound challenging, because the Docker daemon will run in another (Docker) container. Hence the name Docker in Docker. However, because Docker is basically a client-server application, this term may mean 2 different things.

Docker Client or Docker Daemon in Docker?

First, a Docker client may run inside a Docker container. It needs a Docker server to interact with. One option is the Docker daemon that runs the container itself: the container with the Docker client interacts with that daemon via the Docker socket, which is mapped to some path inside the container via volumes. Another option is a Docker daemon running inside a Docker container, with the client running either in the same container or in a different one.
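From the client’s point of view, the two setups differ mainly in the endpoint it talks to. The sketch below is only an illustration: `docker_endpoint` is a hypothetical helper, the socket path is Docker’s usual default on Linux, and the TCP address is the one used later in this demo.

```shell
# Print the endpoint a Docker client would use:
# DOCKER_HOST if it is set, otherwise the local Unix socket.
docker_endpoint() {
  echo "${DOCKER_HOST:-unix:///var/run/docker.sock}"
}

# Socket mounted from the host (first option): DOCKER_HOST is left unset.
# Daemon in a neighbouring container (second option):
#   DOCKER_HOST=tcp://localhost:2375 docker_endpoint
```

With the first option the client reaches whatever daemon owns the mounted socket; with the second it reaches a daemon that exists only for the build.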

Why use Docker Daemon in Docker?

First, because we may not have control over the container runtime in the Kubernetes cluster. It could be Docker, but it could be containerd as well. Next, giving individual builder containers access to the host’s Docker daemon via the socket may have security implications. In addition, containers started this way share resources with all the workloads deployed to the Kubernetes cluster, which is undesirable, mostly because a single build could jeopardize critical services like Jenkins itself. You may say, wait, is running a Docker daemon for each build better in terms of resource consumption? Yes, that may sound heavy. However, these daemons run in isolation from the host’s Docker daemon and only for the duration of the builds.

Enough talking. Let’s show how to build an image for Docker in Docker agent and show sample Jenkins builds using it.

Jenkins Docker in Docker Agent demo

Demo Prerequisites

First, you’ll need Docker for building and running images.

Next, you’ll need a Kubernetes cluster. If you don’t have one, install minikube and kubectl on your machine.

Then, start a Kubernetes cluster using minikube start --profile custom.

If you prefer, you can also repeat this demo on a managed Kubernetes cluster.

Install helm as well. We’ll use it for installing Jenkins in the cluster.
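Before going further, it may help to confirm that the prerequisites are actually on your PATH. This is a minimal sketch; `missing_tools` is a hypothetical helper, not part of any of the tools above.

```shell
# Print the name of every listed tool that cannot be found on PATH.
missing_tools() {
  for tool in "$@"; do
    command -v "$tool" >/dev/null 2>&1 || echo "$tool"
  done
}

# Example call for this demo (prints nothing when everything is installed):
#   missing_tools docker kubectl minikube helm
```

On a managed cluster you would simply drop minikube from the list.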

Install Jenkins helm chart

There are several ways to install Jenkins according to the official Jenkins documentation. We’ll install Jenkins using Helm. I picked this way because it makes it easy to configure Jenkins using values.yaml. More specifically, we’ll configure Jenkins to use the Docker in Docker agent image.
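As a sketch of what configuring through values can look like, the Minikube-specific setting used in this demo (a NodePort service) could also be kept in a small override file instead of editing the full values.yaml. The function name and the file name are hypothetical, and the sketch assumes that serviceType sits under the controller key in the chart’s values.

```shell
# Write a minimal override file that exposes Jenkins through a NodePort service.
write_minikube_overrides() {
  target=$1
  cat > "$target" <<'EOF'
controller:
  serviceType: NodePort
EOF
}

# Example: write_minikube_overrides overrides.yaml
```

Helm accepts several -f flags, with later files taking precedence, so such a file could be passed after the downloaded values.yaml.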

Before we install Jenkins, let’s make some preparation steps:

# create a dedicated namespace where the Jenkins Helm chart will be installed
kubectl create namespace jenkins

Let’s configure Jenkins by putting the relevant values into values.yaml:

- download the official Helm chart’s values.yaml:

wget -4 https://raw.githubusercontent.com/jenkinsci/helm-charts/main/charts/jenkins/values.yaml

- many aspects of Jenkins can be configured using values.yaml; let’s put in the minimum necessary for installing Jenkins on Minikube:

- set serviceType to NodePort

- set

- install the Jenkins release using the commands below:

helm repo add jenkinsci https://charts.jenkins.io
helm repo update
helm install jenkins -n jenkins -f values.yaml jenkinsci/jenkins

- after the Jenkins images are downloaded and deployed, you will see the status below:

kubectl get all -n jenkins

pod/jenkins-0   2/2   Running   0   2m57s

NAME                    TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)          AGE
service/jenkins         NodePort    10.100.21.112   <none>        8080:30653/TCP   2m57s
service/jenkins-agent   ClusterIP   10.96.252.104   <none>        50000/TCP        2m57s

NAME                       READY   AGE
statefulset.apps/jenkins   1/1     2m57s

Now, we’ll follow the documentation in full:

- To get the Jenkins URL, run the commands below:

jsonpath="{.spec.ports[0].nodePort}"
NODE_PORT=$(kubectl get -n jenkins -o jsonpath="$jsonpath" services jenkins)
jsonpath="{.items[0].status.addresses[0].address}"
# nodes are cluster-scoped, so no namespace flag is needed here
NODE_IP=$(kubectl get nodes -o jsonpath="$jsonpath")
echo "http://$NODE_IP:$NODE_PORT/login"

- Next, navigate to the Jenkins URL and log in as the admin user with the password that can be retrieved using the commands below:

jsonpath="{.data.jenkins-admin-password}"
secret=$(kubectl get secret -n jenkins jenkins -o jsonpath="$jsonpath")
# the secret is stored base64-encoded
echo "$secret" | base64 --decode; echo

That’s how easy it is to bring up Jenkins using Helm. The chart’s values.yaml provides a nice way to configure almost all aspects of Jenkins in a configuration-as-code way. Therefore, we’ll put a reference to the Docker in Docker agent there as well. Yet, first let’s create the image for this agent.

Create Docker in Docker Agent image

To create the Docker in Docker Jenkins agent, we’ll use the 2 images below:

- For the Docker daemon in Docker part, we’ll use the official Docker dind image.

- For the Docker client in Docker part, we’ll use an image built from the Dockerfile below:

FROM jenkins/jnlp-agent-docker
USER root
COPY entrypoint.sh /entrypoint.sh
RUN chown jenkins:jenkins /entrypoint.sh
RUN chmod +x /entrypoint.sh
USER jenkins
# exec form, so that the script receives the container arguments as "$@"
ENTRYPOINT ["/entrypoint.sh"]

entrypoint.sh busy waits for the Docker daemon to become available and then starts the Jenkins agent, which connects to the Jenkins controller using Jenkins remoting.

#!/usr/bin/env bash
RETRIES=6
sleep_exp_backoff=1
# wait for the Docker daemon, doubling the pause after each failed attempt
for ((i = 0; i < RETRIES; i++)); do
  if docker version; then
    break
  fi
  sleep "${sleep_exp_backoff}"
  sleep_exp_backoff="$((sleep_exp_backoff * 2))"
done
# replace the shell with the agent process and pass the arguments through
exec /usr/local/bin/jenkins-agent "$@"

You can find the above files in the dind-client-jenkins-agent repository.
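The waiting loop in entrypoint.sh is an instance of retrying with exponential backoff: with 6 attempts and a 1 second start it pauses for 1, 2, 4, 8, 16 and 32 seconds, so it gives the daemon roughly a minute. The same idea can be sketched as a reusable POSIX shell function. Both `retry_with_backoff` and the `BACKOFF_START` variable are hypothetical names introduced here, not something the agent image provides.

```shell
# Run a command up to N times, doubling the pause after each failure.
# Usage: retry_with_backoff <attempts> <command> [args...]
retry_with_backoff() {
  retries=$1
  shift
  delay=${BACKOFF_START:-1}   # first pause in seconds (assumed default: 1)
  attempt=0
  while [ "$attempt" -lt "$retries" ]; do
    if "$@"; then
      return 0                # the command succeeded, stop retrying
    fi
    sleep "$delay"
    delay=$((delay * 2))
    attempt=$((attempt + 1))
  done
  return 1                    # every attempt failed
}

# Example: retry_with_backoff 6 docker version
```

Unlike the original loop, this version reports failure through its exit status, so a caller could decide not to start the agent when the daemon never comes up.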

I guess I owe you a bit of explanation here, because we said we’ll use a Docker daemon in Docker Jenkins agent, and yet we also mentioned a Docker client in Docker part.

As we said, Jenkins builds will run in dynamically provisioned Kubernetes pods. And as we already know, a Kubernetes pod may run more than one container. Hence, in our setup, each pod for the Docker in Docker agent will have 2 containers. The first one is the container with the Docker client. It’s basically the Jenkins agent, because it extends the jenkins/jnlp-agent-docker base image. The second one is the container with the Docker daemon inside. It will run as a sidecar container in the same pod.

Finally, let’s build the dind-client-jenkins-agent image and push it to Docker Hub:

# point your shell to minikube's docker-daemon (the cluster was started with --profile custom)
$ eval $(minikube -p custom docker-env)
# build the image
$ docker build -t dind-client-jenkins-agent .
# tag the image
$ docker tag dind-client-jenkins-agent [docker_hub_id]/dind-client-jenkins-agent
# push the image
$ docker push [docker_hub_id]/dind-client-jenkins-agent

You probably wonder how all of this works. It’s time to see this agent in action.

Use Docker in Docker agent

Upgrade Jenkins helm release

First, let’s add references to the agent to the Jenkins Helm chart’s values.yaml.

To see the default settings for all agents, have a look at the agent key.

We’ll put the reference to and the configuration of the Jenkins Docker in Docker agent under the additionalAgents key:

additionalAgents:
  dind:
    podName: dind-agent
    customJenkinsLabels: dind-agent
    image: dind-client-jenkins-agent
    tag: latest
    envVars:
      - name: DOCKER_HOST
        value: "tcp://localhost:2375"
    alwaysPullImage: true
    yamlTemplate: |-
      spec:
        containers:
        - name: dind-daemon
          image: docker:20.10-dind
          securityContext:
            privileged: true
          env:
          - name: DOCKER_TLS_VERIFY
            value: ""

Note that because the Docker client and the Docker daemon run in containers in the same pod, they share the same network namespace, so the client can connect to the daemon using localhost:2375. To see why we used privileged and DOCKER_TLS_VERIFY, refer to the dind image description. You can see the full values.yaml in the jenkins-dind-demo repository.

Now, let’s upgrade our Jenkins release using new values.yaml:

helm upgrade jenkins jenkinsci/jenkins -n jenkins -f values.yaml

Run sample Jenkins job using Docker in Docker agent

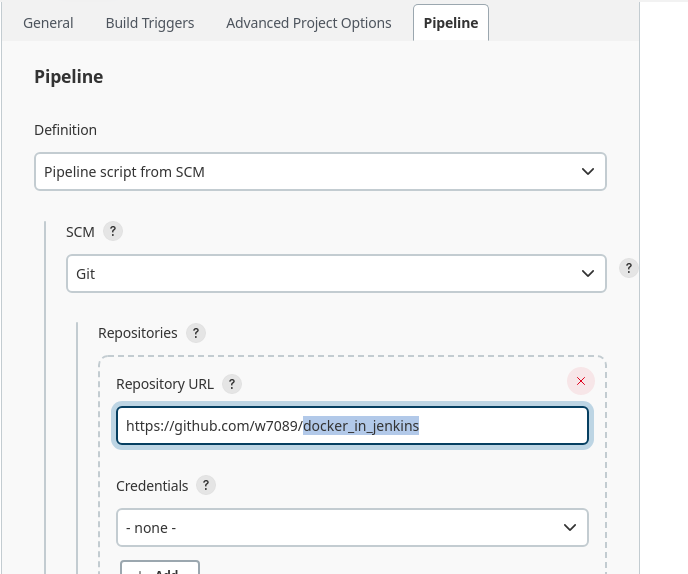

Now, let’s create a sample Jenkins pipeline which will use our Docker in Docker agent. We’ll use it to build a Docker image from the repository we used while exploring CI/CD using Jenkins and Docker.

Jenkinsfile is straightforward:

pipeline {
    agent {
        label 'dind-agent'
    }
    stages {
        stage("build image") {
            steps {
                script {
                    sh "docker build jenkins -t docker-in-jenkins"
                }
            }
        }
    }
}

Pay attention to the agent’s label, dind-agent. It’s the same label we assigned to the dind agent in the customJenkinsLabels field. That’s why builds of this pipeline will use our Docker in Docker images when Kubernetes pods are provisioned for them.

Let’s run the pipeline in Jenkins UI and inspect pods using kubectl while it’s running. Wow, we see that dind pod contains 2 containers (one with docker client and another one with docker daemon) and it was dynamically provisioned just for this build.

$ kubectl get pod -n jenkins
pod/dind-agent-w622h   2/2   Running   0   35s
pod/jenkins-0          2/2   Running   2   23h

Inspect the job’s Console output to see the actual Kubernetes pod manifest used to provision the agent. After a while, we see that the image was built successfully and the dind pod was terminated. Of course, one can use docker push, docker run or any other docker CLI command in the pipeline. That’s cool, isn’t it?
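To confirm which two containers the agent pod runs, one could ask kubectl for the container names directly. This is a sketch only: `pod_container_names` is a hypothetical helper, the `KUBECTL` variable exists purely so the command can be swapped out for a dry run, and the pod name is the one from the output above (yours will differ).

```shell
# Print the names of all containers declared in a pod.
# Usage: pod_container_names <namespace> <pod>
pod_container_names() {
  namespace=$1
  pod=$2
  "${KUBECTL:-kubectl}" get pod -n "$namespace" "$pod" \
    -o jsonpath='{.spec.containers[*].name}'
}

# Example, while the build is still running:
#   pod_container_names jenkins dind-agent-w622h
```

One of the printed names should be dind-daemon, the sidecar defined in yamlTemplate.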

Final words on performance and alternatives

docker cache

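Of course, to fully leverage the Docker in Docker agent, one would need to somehow cache the docker build results produced on the agent. By default, docker build reuses layers that the daemon has cached from earlier builds, but here every build gets a fresh daemon with an empty cache. Caching the results would bring that default behavior back.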
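One common way to approximate this, which the demo itself does not implement, is to use a previously pushed image as the cache source. The sketch below assumes the agent is allowed to pull from and push to the registry; `build_with_registry_cache` is a hypothetical helper and the `DOCKER` variable is there only to allow a dry run. Depending on the builder in use (for example BuildKit), the cached image may also need inline cache metadata, so treat this as a starting point.

```shell
# Build an image using the last pushed version of itself as layer cache.
# Usage: build_with_registry_cache <image> [context]
build_with_registry_cache() {
  image=$1
  context=${2:-.}
  docker_cmd=${DOCKER:-docker}
  # A failed pull (for example on the very first build) is fine:
  # the build then simply starts with a cold cache.
  "$docker_cmd" pull "$image" || true
  "$docker_cmd" build --cache-from "$image" -t "$image" "$context"
  "$docker_cmd" push "$image"
}

# Example: build_with_registry_cache [docker_hub_id]/docker-in-jenkins jenkins
```

The trade-off is an extra pull at the start of every build, which pays off only when the reused layers are expensive to rebuild.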

Podman, Kaniko – daemonless and rootless alternatives

Wait, do we really need a Docker daemon agent while there are Podman and Kaniko? True, these tools don’t require a daemon. So our alternative agent could use just one container (with Podman or Kaniko inside). Yet, Kaniko is only capable of building and pushing images, while sometimes it’s useful to run them, e.g. as part of tests.

Then, there’s Podman, which uses the same CLI as Docker. The Podman Jenkins agent I described in another post uses it as well. For example, you can use podman build instead of docker build. It’s indeed lightweight and doesn’t require the daemon Docker needs. Yet, from my understanding, it’s still not widely adopted. Have a look, for instance, at the number of questions on Stack Overflow tagged with the podman and docker tags and draw your own conclusions.

Moreover, I personally encountered random bugs while building images using Podman, and there were even use cases where only the Docker agent worked for me. One example is running Minikube inside the agent. In that case, minikube runs as a container inside the Docker daemon container, which runs as part of a pod inside a Kubernetes cluster, which itself may run in a minikube container (as in our demo) managed by some host’s Docker daemon. That may sound crazy, but there are use cases where we may need it, for example for deploying and testing a Helm chart as part of a Jenkins pipeline. I covered this use case in the Running minikube in Docker container post.

Summary

That’s it about the Jenkins Docker in Docker agent. You may find my other Kubernetes and Jenkins articles useful. As always, feel free to share.
